Is ChatGPT Safe? – What You Should Know

When you purchase through links on my site, I may earn an affiliate commission. Here’s how it works.

Now, unless you've been living under a rock, you've probably heard of ChatGPT. It's the AI chatbot that's been blowing up the internet with its ability to write essays, answer questions, and even code – basically, hold a conversation that’s human-like (not really human yet, though). It's pretty mind-blowing tech, and the potential is insane.

But with all the hype, there's also a growing concern: just how safe is this thing? We're putting a lot of trust in this AI, feeding it information, and relying on it for answers. So, naturally, we gotta ask the tough questions. Can we trust it with our data? Is it going to spread misinformation? And what about the ethical implications of this kind of technology?

That's exactly what we're tackling in this blog post. I’m going to break down the safety of ChatGPT from all angles, looking at the facts, the potential risks, and everything in between. No hype, no fear-mongering, just straight-up information so you can make your own informed decisions about how you use this tech.

Think of this as your tech review for ChatGPT, but instead of focusing on specs and benchmarks, we're all about safety and responsibility. Let’s be honest: At the end of the day, AI is a tool, and like any tool, it can be used for good or bad. Let's make sure we're using it the right way.

1. How Does ChatGPT Handle User Data?

When we're interacting with any AI, especially one this powerful, we're essentially handing over our thoughts, questions, and sometimes even personal information. So, how does ChatGPT handle all that?

ChatGPT collects data on your inputs and the conversations you have. Think of it like your search history, but instead of just keywords, it's full-on dialogues. That data is used to improve the model, making it smarter and more responsive.

Now, OpenAI, the company behind ChatGPT, says they store this data securely and have policies in place to protect your privacy. But, like with any online service, there's always the risk of a data breach. And if that happens, well, it could expose your conversations and potentially even some personal info if you've shared any.

Here's the thing that kind of tripped me out: your conversations with ChatGPT aren't necessarily private. By default, they can be used to further train the model. This means that what you're talking about could be used to teach the AI, potentially even influencing its responses to other users. Now, they do give you the option to opt out of having your chats used for training, which is a plus. But still, it's something to be aware of, especially if you're discussing sensitive information.

Speaking of control, you do have some options for managing your data. You can delete your conversations, which is a good idea if you're concerned about privacy. But there are limitations. For example, deleting a conversation doesn't necessarily erase it completely from OpenAI's systems, especially if it was used for training purposes.

So how does ChatGPT stack up against other AI models out there? Well, it's a bit of a mixed bag. OpenAI is pretty transparent about their data practices, and they do offer some control over your data, which is more than some other companies can say. But there's definitely room for improvement. For example, they could be clearer about how long they retain data and give users more granular control over what gets used for training.

At the end of the day, AI is still in its early stages, and we're all figuring this out together. But one thing's for sure: we need more transparency and user control when it comes to our data. It's our information, and we should have a say in how it's being used!

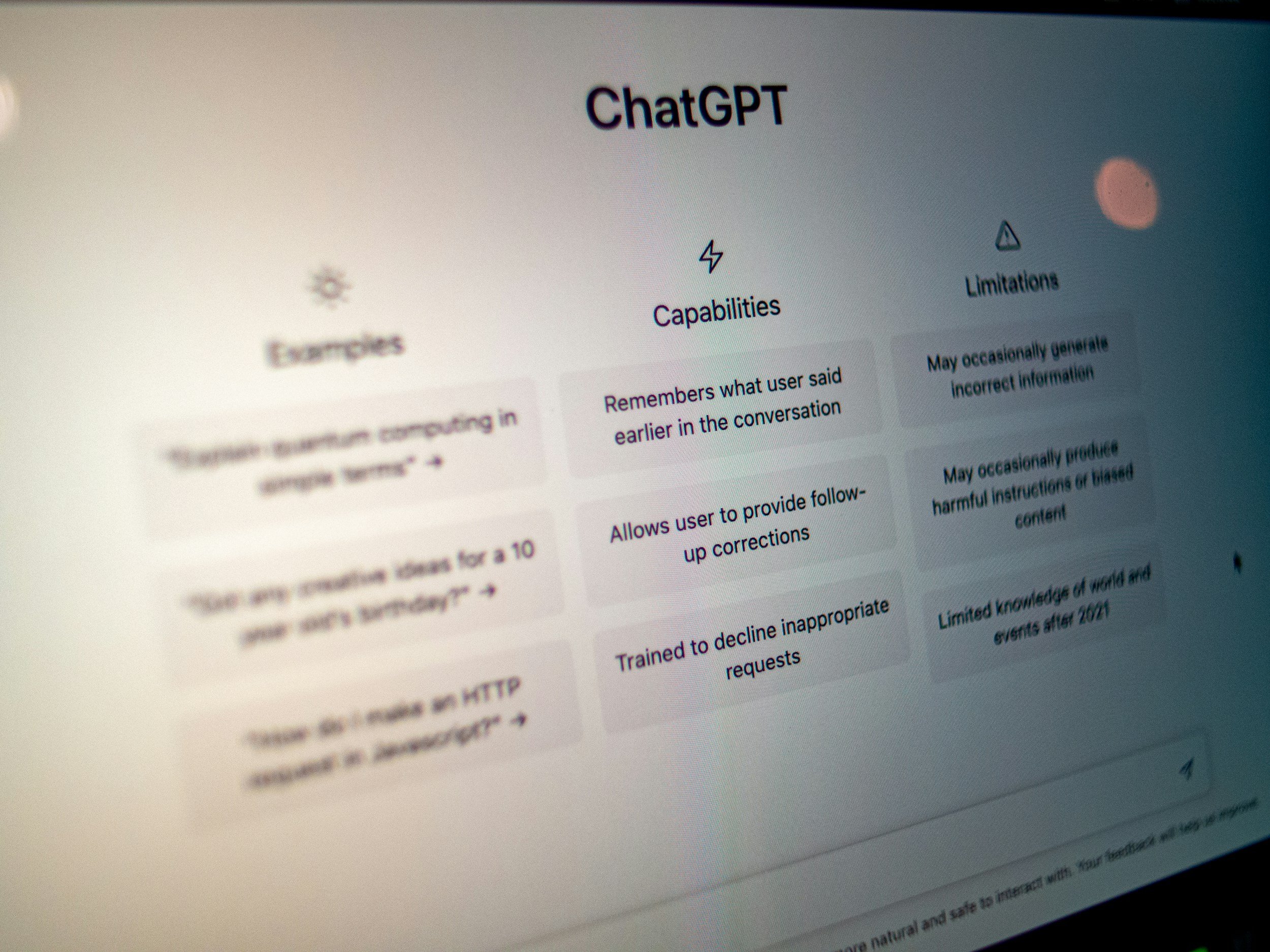

2. The Risk of Misinformation and Bias

Alright, so we've talked about data privacy, but now let's get into something even trickier: misinformation and bias. See, ChatGPT learns from a massive amount of text data, and that data reflects the biases that exist in the real world.

You can think of it like this: if you feed the AI a bunch of articles with a certain viewpoint, it's going to start generating text that leans in that direction, even if that viewpoint is inaccurate or harmful.

This can lead to some serious issues. I've seen examples of ChatGPT generating false information, like making up historical events or scientific facts. It can also perpetuate harmful stereotypes, reinforcing existing biases around gender, race, or other sensitive topics.

So, how do we deal with this? Well, I think human oversight is key. OpenAI has teams of people working to moderate the content generated by ChatGPT, trying to catch and correct these issues. But it's a massive challenge. Imagine trying to fact-check every single sentence that comes out of this AI – it's kinda like playing whack-a-mole with an endless supply of moles.

That's why it's crucial for us, the users, to be responsible and critical. We can't just blindly accept everything ChatGPT tells us as gospel. We need to do our own research, cross-check information, and use our own judgment. Think of it this way: you wouldn't trust a random stranger on the internet with your life savings, right? Same goes for AI.

Here are a few tips to avoid getting caught by AI-generated misinformation:

Be Skeptical

Don't take anything at face value. If something sounds off or too good to be true, it probably is.

Cross-Check

Verify information from multiple sources. Don't rely on ChatGPT as your sole source of truth.

Look for Sources

Ask ChatGPT for its sources, then check that they actually exist and that they're credible. Chatbots can make up references that look real.

Use Your Judgment

If something feels biased or discriminatory, trust your gut.

Ultimately, AI is a reflection of ourselves and the data we feed it. It's up to all of us to be responsible users and critical thinkers to ensure that AI is used for good, not for spreading misinformation.

3. The Risk of Malicious Use

Okay, we've covered data and bias, but let's talk about the darker side of AI: malicious use. Because with great power comes, well, you know the rest. ChatGPT's ability to generate human-like text makes it a potential tool for scammers and those with bad intentions.

One area where this is a real concern is phishing and social engineering. Imagine getting an email that looks like it's from your bank, asking you to update your account information. But it's not your bank at all. It's a scammer using ChatGPT to craft a super convincing message designed to steal your info. This is where things get tricky because these AI-generated phishing attempts can be much more sophisticated and personalized than the usual spam we're used to.

So, how do you protect yourself? Here are a few things to watch out for:

Suspicious Requests

Be wary of any unsolicited requests for personal information, especially if they come via email or social media.

Check the Sender

Double-check the sender's email address or profile to make sure it's legitimate. Look for any inconsistencies or misspellings.

Don't Click on Links

Avoid clicking on links in emails or messages from unknown senders.

Trust Your Gut

If something feels off, it probably is. Don't be afraid to contact the supposed sender directly to verify the request.
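
If you like to tinker, the "Check the Sender" tip can be roughed out in a few lines of Python. This is only a sketch under my own assumptions: the `TRUSTED_DOMAINS` list, the `check_sender` helper, and the 0.8 similarity threshold are all made up for illustration. It flags domains that are almost, but not exactly, a domain you trust. Keep in mind that a sender address can be spoofed, so "trusted" here only means the text of the domain matches.

```python
from difflib import SequenceMatcher
from email.utils import parseaddr

# Hypothetical allow-list: swap in the domains you actually deal with.
TRUSTED_DOMAINS = ["mybank.com", "paypal.com"]


def sender_domain(sender: str) -> str:
    """Pull the lowercased domain out of a From value such as 'My Bank <a@b.com>'."""
    _, address = parseaddr(sender)
    return address.rsplit("@", 1)[-1].strip().lower()


def check_sender(sender: str, threshold: float = 0.8) -> str:
    """Classify a sender as 'trusted', a 'lookalike of ...', or 'unknown'."""
    domain = sender_domain(sender)
    if domain in TRUSTED_DOMAINS:
        return "trusted"
    for trusted in TRUSTED_DOMAINS:
        # A high similarity score without an exact match hints at a misspelled copy.
        if SequenceMatcher(None, domain, trusted).ratio() >= threshold:
            return f"lookalike of {trusted}"
    return "unknown"


if __name__ == "__main__":
    for sender in ["alerts@mybank.com", "My Bank <alerts@mybanc.com>", "friend@example.org"]:
        print(sender, "->", check_sender(sender))
```

A "lookalike" result is a reason to slow down and contact the real company directly, not proof of a scam. And an "unknown" result is not a green light either.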

But phishing is just the tip of the iceberg. ChatGPT can also be used to generate other types of harmful content, like hate speech, propaganda, and even fake news articles (yes, even with OpenAI’s limits in place). This stuff can spread like wildfire online, influencing opinions and potentially inciting violence. It's a serious ethical dilemma, and we need to be aware of the potential consequences.

4. Safety Measures and Some Best Practices

So we've talked about the potential risks of ChatGPT, but it's not all doom and gloom. OpenAI is aware of these challenges and they're actively working on safety mitigations. They've got a whole team of researchers and engineers dedicated to making this AI safer and more reliable.

Here's a rundown of some of the safety measures they've implemented:

Training Data Filtering

They're constantly refining the data used to train ChatGPT, trying to remove biases and harmful content.

Content Moderation

They use a combination of AI and human reviewers to monitor and flag inappropriate or harmful outputs.

Safety Guidelines

They've established clear guidelines for what kind of content is allowed and not allowed, and they're constantly updating these as they learn more.

User Feedback

They encourage users to report any issues or problematic outputs they encounter, which helps them improve the system.

Red Teaming

They have "red teams" who actively try to break the system and find vulnerabilities, so they can be addressed.

These measures are definitely a step in the right direction, but let's be real, no system is perfect. There will always be ways to misuse AI, and there will always be challenges in keeping it completely safe. That's why user responsibility is also important.

Note: To be clear, the primary responsibility lies with OpenAI. They are the ones responsible for ensuring ChatGPT is safe. However, I still think, to some degree, we, the users, have a role to play in ensuring the safe use of ChatGPT. I like comparing it to driving a car: you've got safety features like airbags and seatbelts, but you still need to drive responsibly and follow the rules of the road.

Here are a few tips for using ChatGPT safely:

Don't Share Personal Info

Avoid sharing sensitive information like your address, phone number, or financial details!

Be Critical of the Output

Remember that ChatGPT can generate incorrect or biased information. Always double-check and use your own judgment.

Report Any Issues

If you encounter any harmful or inappropriate content, report it to OpenAI. This helps them improve the system and prevent similar issues in the future.

Please Use It for Good

I know this sounds cheesy but please try to focus on using ChatGPT for positive purposes like learning, creating, and exploring new ideas.
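
For the "Don't Share Personal Info" tip, here's one way you could scrub a prompt before pasting it into a chatbot. Treat it as a minimal sketch: the `PATTERNS` table and the `redact` function are my own illustration, and simple regular expressions like these will miss plenty (names, street addresses, anything unusual), so they are no substitute for reading over what you're about to send.

```python
import re

# Illustrative patterns only; real personal-data detection needs much more than this.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13 to 16 digits, optionally separated by spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # Loose phone match: starts and ends with a digit, at least 9 characters long.
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace anything that looks like an email, card number, or phone number with a tag."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Email me at jane.doe@example.com or call 555-123-4567."
    print(redact(prompt))
```

The card pattern runs before the phone pattern on purpose, so a long card number gets tagged as a card instead of being picked up by the looser phone rule.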

Final Thoughts

Let's wrap things up with the big picture. Is ChatGPT safe? Well, like with most tech, it's not a simple yes or no answer.

ChatGPT is a powerful tool with incredible potential, but it also comes with risks. We've seen how it can be misused to spread misinformation, generate harmful content, or even trick people with sophisticated phishing scams. But we've also seen what good it can do, and that OpenAI is working to improve the safety and reliability of ChatGPT (at least that’s what they’re saying).

The key takeaway here is that AI safety is an ongoing process. It requires collaboration between the creators, the users, and the wider community. I think we need to keep researching, discussing, demanding, and debating these issues to ensure that AI is developed and used responsibly.

So, what can you do? First off, be a responsible user. Please think critically about the information you get from ChatGPT, don't share personal details, and report any issues you encounter. But beyond that, stay informed and engaged in the conversation about AI safety. Read articles, follow experts, and participate in discussions online. The future of AI is being shaped right now, and we all have a role to play.

And of course, I want to hear from you guys! What are your thoughts on ChatGPT and AI safety? Have you encountered any issues, or do you have any concerns? Drop a comment below or hit me up on social media. Let's keep this conversation going.

Also, don’t forget to subscribe to my tech newsletter for the latest news on AI and other technologies.

Thanks a bunch for reading – I'll catch you in the next one.

FAQ

-

Nope! ChatGPT is super smart, but it's not sentient. It doesn't have emotions or consciousness. Think of it like a really advanced parrot that can mimic human conversation. It can string words together in a way that makes sense, but it doesn't actually understand what it's saying.

-

It's best to be cautious. While OpenAI has security measures in place, there's always a risk with sharing personal info online. Avoid giving out things like your address, phone number, or financial details. Treat it like any other online service – be mindful of what you share.

-

Technically, yes. But should you? Probably not. Using AI to do your work for you defeats the purpose of learning. Plus, many schools are already using tools to detect AI-generated text. Focus on using ChatGPT as a learning tool, not a shortcut.

-

It's a valid concern. AI will definitely change the job market, and some jobs might be automated. But it will also create new opportunities. The key is to adapt and learn new skills. Think of AI as a tool that can help you do your job better, not as a replacement for you.

-

It's getting harder and harder to tell, but there are some clues. AI-generated text can sometimes sound repetitive or have a slightly unnatural flow. It might also struggle with complex reasoning or nuanced arguments. But honestly, with the way AI is evolving, it's becoming increasingly difficult to detect.

-

Stay informed! Educate yourself about the potential risks and benefits of AI. Use AI responsibly and ethically. And most importantly, participate in the conversation! Share your thoughts, concerns, and ideas with others. I think we all have a role to play in shaping the future of AI.
